Remember the existential dread when ChatGPT first dropped? One day, AI was a sci-fi trope; the next, it was writing your emails and assignments. That whiplash was not the arrival of some alien intelligence but the product of a fundamental architectural shift, one that soaked up the internet and learned to predict the next word with terrifying accuracy. The secret isn’t sentience; it’s statistics on a scale so vast it defies human comprehension.

At its core, a Large Language Model is a prediction engine. Think of it as the world’s most overqualified autocomplete. You give it a prompt, and it calculates the probability of every possible word that could come next. To build a chatbot, you prime it with a script of a helpful AI assistant and let it autocomplete the conversation. The “magic” emerges from sheer scale: training on text that would take a human millennia to read, tuning hundreds of billions of internal parameters.
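To make the "overqualified autocomplete" idea concrete, here is a minimal sketch of the prediction step. The prompt, the candidate words, and their scores are all made up for illustration; a real model scores tens of thousands of tokens using billions of learned parameters.

```python
import math
import random

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits.values())  # subtract the max for numerical stability
    exps = {word: math.exp(score - peak) for word, score in logits.items()}
    total = sum(exps.values())
    return {word: value / total for word, value in exps.items()}

def sample_next_word(probs, rng):
    """Pick one word at random, weighted by its probability."""
    words = list(probs)
    weights = [probs[word] for word in words]
    return rng.choices(words, weights=weights, k=1)[0]

# Hypothetical scores a model might assign after "The cat sat on the".
toy_logits = {"mat": 4.0, "sofa": 3.0, "roof": 2.0, "moon": -1.0}

probs = softmax(toy_logits)
most_likely = max(probs, key=probs.get)
next_word = sample_next_word(probs, random.Random(0))

print("most likely:", most_likely)
print("sampled:", next_word)
```

Sampling from the distribution, rather than always taking the top word, is one reason the same prompt can produce different answers on different runs.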

Before we dive into the mechanics, our first video breaks down how AI, machine learning, and deep learning all fit together in the grand scheme of things.

How AI, ML, and Deep Learning Fit Together

The real genius lies in the “transformer” architecture, introduced by Google researchers in 2017. Unlike older models that processed text sequentially (like a slow reader), transformers analyze all words in a prompt simultaneously. They use a mechanism called “attention” to weigh the importance of each word in relation to all the others, allowing the model to capture contextual nuance. This parallel processing, run on massive arrays of GPUs, is what enables today’s breathtaking speed and coherence.
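The attention step can be sketched in a few lines of plain Python. This is a toy version of scaled dot-product attention under simplifying assumptions: the three word vectors are invented, and the same vectors serve as queries, keys, and values, whereas a real transformer derives each from learned projections.

```python
import math

def softmax(scores):
    """Turn a list of scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs, all_weights = [], []
    for q in queries:
        # Score this word against every word in the prompt, itself included.
        weights = softmax([dot(q, k) / scale for k in keys])
        # Blend the value vectors according to those weights.
        mixed = [
            sum(w * v[i] for w, v in zip(weights, values))
            for i in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-dimensional vectors standing in for a three-word prompt.
vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(vectors, vectors, vectors)

for row in weights:
    print([round(w, 3) for w in row])
```

The loop here runs one word at a time only because it is plain Python. In practice the same arithmetic is expressed as matrix multiplications, which is what lets GPUs handle every word in the prompt at once.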

| Feature | Old Sequential Models | Transformer Models | Why You Care |
| --- | --- | --- | --- |
| Text Processing | Word-by-word (slow) | All words at once (fast) | Responsive, real-time chatbots |
| Context Understanding | Limited window | Full-sentence context | Nuanced, relevant responses |
| Training Efficiency | Low | Extremely High | Faster innovation, wider access |

Compared to its predecessors, the transformer is less a step forward and more a quantum leap. Older recurrent neural networks (RNNs) were notoriously slow and forgetful, struggling with long sentences; the transformer’s parallel nature eliminated this bottleneck. Where RNNs processed language like a slow, forgetful reader, transformers absorb and contextualize information like a seasoned scholar cross-referencing an entire library at once. The difference in output quality is night and day.

But how do these models actually learn? Our next video crams a semester’s worth of machine learning algorithms into 17 brilliantly concise minutes.

All Machine Learning Algorithms Explained

Of course, a model that just predicts the next word based on the entire internet would be a chaotic, often horrific, mess. This is where Reinforcement Learning from Human Feedback (RLHF) comes in. After pre-training, human workers meticulously rate the model’s outputs, steering it toward helpful, harmless, and honest responses. This fine-tuning is the difference between an edgy Reddit commenter and a polished, usable assistant. Many tools offer freemium tiers, but full power typically requires a paid subscription. The headline “spec” is parameter count (175 billion for GPT-3, for example), yet training data quality and reinforcement learning are just as crucial.

The computational cost is the true barrier to entry. Training a top-tier model requires so many calculations that, even at a billion operations per second, the job would take over 100 million years. This concentration of resources in a handful of tech giants shapes the entire ecosystem, dictating whose values get baked into the models we all use. It’s less a Skynet takeover and more a brutally expensive compute war that centralizes power.
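The arithmetic behind a figure like "100 million years" is easy to reproduce. The sketch below uses invented round numbers: the total operation count, the single-machine rate of a billion operations per second, and the cluster size are assumptions for illustration, not measurements of any real model or data center.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60
SECONDS_PER_DAY = 24 * 60 * 60

def training_seconds(total_operations, operations_per_second):
    """Seconds needed to run a fixed number of operations at a steady rate."""
    return total_operations / operations_per_second

# Hypothetical figures, chosen only to illustrate the scale.
total_ops = 4e24                  # operations in one assumed training run
single_machine_rate = 1e9         # one billion operations per second
cluster_rate = 10_000 * 1e14      # 10,000 assumed accelerators at 1e14 ops/s each

years_single = training_seconds(total_ops, single_machine_rate) / SECONDS_PER_YEAR
days_cluster = training_seconds(total_ops, cluster_rate) / SECONDS_PER_DAY

print(f"single machine: about {years_single / 1e6:.0f} million years")
print(f"cluster: about {days_cluster:.0f} days")
```

Under these assumptions the same workload shrinks from geological time to a matter of weeks, which is why access to very large clusters, rather than any single clever trick, decides who can train frontier models.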

Large Language Models Explained Briefly

So, the next time an AI helps you, remember: you’re not conversing with a mind. You’re navigating a labyrinth of probabilities, a reflection of our own language curated by an army of silicon chips and human annotators. It is not so much artificial intelligence as artificial competence on a truly massive scale. Ignore the hype; the real story is the engineering marvel, not the emergence of a silicon soul.

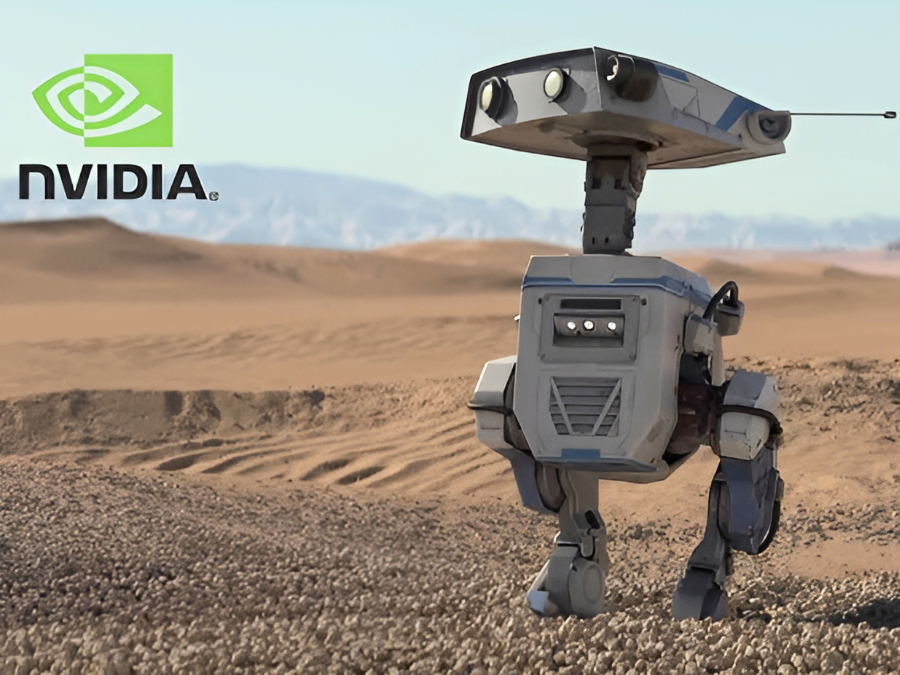
